Easy and Cheap Way to Increase 3DMark

While browsing for old cards (as usual), my eyes landed upon something called Savage IX, the first of S3's attempts to break into the laptop market. While I had read about the IX in the past, there was little to no info about it, other than some very basic specs, which might or might not be correct. The card itself was cheap enough, so I thought, why not see if I can clear the confusion? So I went and ordered it. As it turns out, things are never so easy.

The card was shipped in its box. As you might expect from an old laptop product, the packaging was the tackiest thing ever, and I especially loved the manual in broken English that contained information about every possible card, except the one you just bought. Of course. I don't know who the vendor was, but even they didn't want people to know they had just bought a Savage. Figures.

Trouble started immediately, by the way. Upon installing the drivers and rebooting, Windows 98 would seemingly become unresponsive. After a long time scratching my head and deleting drivers from safe mode, I finally noticed that Windows wasn't unresponsive; rather, it was in extended desktop mode. Yes, for whatever reason my monitor was set as the secondary monitor (even though the card has no TV-out…) and even reinstalling the drivers didn't change it. Laptop heritage? Either way, I was ultimately able to fix it from the registry. In case anyone is actually interested in testing this card, I'll save you the trouble and tell you what you need to change. Go to "HKEY_LOCAL_MACHINE\Config\0001\Display\Settings", then to the device subfolder, and set the string AttachToDesktop to 0. And just to be sure, change the string AttachToDesktop in the main folder too.
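For anyone who would rather not click through regedit, the same change can be written down as a registry script. The snippet below is only a sketch: it builds the text of a REGEDIT4-format file (the format Windows 9x imports), it does not touch the registry itself. `YOUR_DEVICE_SUBKEY` is a placeholder I made up, since the actual name of the device subfolder depends on the installation, and the helper name `build_reg_script` is purely illustrative.

```python
# Sketch: generate a Windows 9x registry script (REGEDIT4 format) for the fix above.
# The base key comes from the text; the device subkey name is a PLACEHOLDER.
BASE_KEY = r"HKEY_LOCAL_MACHINE\Config\0001\Display\Settings"


def build_reg_script(device_subkey):
    """Return REGEDIT4 text setting the string AttachToDesktop to "0"
    in the main Settings key and in the given device subkey."""
    keys = [BASE_KEY, BASE_KEY + "\\" + device_subkey]
    lines = ["REGEDIT4", ""]
    for key in keys:
        lines.append("[" + key + "]")
        lines.append('"AttachToDesktop"="0"')
        lines.append("")
    # .reg files traditionally use CRLF line endings.
    return "\r\n".join(lines)


# Hypothetical subkey name: look up the real one in regedit first.
print(build_reg_script("YOUR_DEVICE_SUBKEY"))
```

Save the output as a .reg file only after checking the real subkey name, and keep a registry backup around, because this card's drivers already gave me enough surprises.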

So let's finally check the card. One final thing before we start: results have been inconsistent sometimes. At one point, 3DMark 2000 would give me a fillrate of 90MT/s, then the next time it would drop to 62MT/s. I thought maybe the chip was overheating, but it's not even warm to the touch, despite the lack of a heatsink. I can only assume the drivers are terrible like that. Also, vsync can be disabled from S3Tweak, but the framerate still seems capped and the resulting flickering is reminiscent of the Savage 3D, albeit not as bad. Just for the sake of testing, I disabled it.

And of course, the specs: Pentium 3 450MHz, 128MB SDRAM PC100, i440BX-2, Windows 98, 60Hz monitor.

Powerstrip first. Well, it kinda bugs out. The core clock is reported as 14MHz and you can't even see the memory clock. It also says the data bus is 64 bits wide. That sounds more believable. I read some info on the internet that the data bus could be 128 bits wide, due to having its memory integrated in the package. But based on my results, I don't believe that. Or perhaps it's true but makes no difference. Now, S3Tweak is slightly better, since it correctly recognizes the card and says the memory clock is 100MHz. Could be, could be not. Unfortunately there's no indication of the core clock, but we'll get to that. MeTaL support is apparently present with version 1.0.2.5, but Adventure Pinball reverts to software rendering when I attempt to enable it. I'm going to assume it's non-functional, like many other things on this card.

Checking out System Info on 3DMark reveals a horrible truth: the card doesn't support multitexturing at all, and the Z-buffer is only 16-bit. Doesn't look like a Savage 4 to me, then. What's worse, it doesn't even support S3TC. Why they would take out that kind of feature, I don't know. It doesn't support edge anti-aliasing either, but that doesn't surprise me. Still, for a laptop card that was supposedly aimed at gamers, you'd think they would try and attract attention with some of their more popular features.

Some interesting results here. The card definitely seems closer to a Savage 3D than a Savage 4. Fillrate makes me think the SIX is clocked at 100MHz just like its memory. Never mind the high texture rendering speed on the S3D, that was probably due to vsync issues that caused heavy flickering. Polygon scores are higher on the SIX, maybe some architecture improvement? But trilinear rendering is a lot slower. Now granted, many cards of the time weren't doing real trilinear. However, the SIX apparently isn't even attempting that, and is doing something else entirely. Luckily that only happens in 3DMark, and the games seem trilinearly filtered.

As you can see, rendering quality is much closer to the Savage 3D than the Savage 4. There are however some issues with textures or maybe lightmaps. Luckily I haven't noticed them anywhere else.

So much for 3DMark 99. Some quick info about 3DMark 2000: scores are very close to the Savage 3D (again), but actually higher all around. My S3D is probably an old model for OEM sellers, presumably running at 90MHz. I'm sure it's nowhere close to those 120MHz Hercules chips. If the SIX is truly running at 100MHz, then the higher results check out. Final Reality isn't really worth checking out either, especially because I lost my detailed S3D scores, but I can say that just like that card, the SIX has some serious issues with the 2D transfer rate.

And did I say that 3DMark 2000 at 1024x768x16 gives out higher scores than the Savage 3D? Well, PC Player D3D Benchmark running at 1024x768x32 gives me 28.3fps, far lower than the S3D at 35.9fps, or the S4 at 41.5fps. That 64-bit data bus is looking right at the moment.
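To put the bus-width question into numbers, here is a back-of-the-envelope sketch of theoretical peak memory bandwidth. It assumes the 100MHz memory clock reported by S3Tweak and plain SDR memory (one transfer per clock). Both are assumptions rather than measurements, and `peak_bandwidth_mb_s` is just an illustrative helper.

```python
def peak_bandwidth_mb_s(bus_bits, clock_mhz, transfers_per_clock=1):
    """Theoretical peak bandwidth in MB/s (1 MB = 10**6 bytes).

    bytes per transfer * million transfers per second.
    """
    return bus_bits / 8 * clock_mhz * transfers_per_clock


# Assumed memory clock: 100 MHz, as reported by S3Tweak (unverified).
for bits in (64, 128):
    print(f"{bits}-bit bus: {peak_bandwidth_mb_s(bits, 100):.0f} MB/s")
# 64-bit bus: 800 MB/s
# 128-bit bus: 1600 MB/s
```

A 128-bit bus would double the ceiling on paper, so a card that sinks at 1024x768x32 behaves more like the lower figure. Real throughput depends on a lot more than this, of course.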

But enough with the synthetic benchmarks, and let's have ourselves some game tests. The cards I included were as follows: Savage 3D and Savage 4 for obvious reasons. Also the Savage 2000, just for fun. Two more cards are included: the first one is the Rage LT Pro, a common laptop card for the time, albeit obviously older. The other is an Ati Rage 128 Pro Ultra. See, I kinda wanted to try and see how the Savage IX compared to the Mobility 128, another potential laptop card of its year. Unfortunately it's quite expensive. So I went for the next best thing: looking at the specs, the Rage 128 Pro is too fast thanks to its 128-bit data bus, but the Ultra variant for the OEM market is only 64-bit, which corresponds to the Mobility. Everything else is very close, except for the 16MB of memory, but for my tests that shouldn't count too much. As a whole, it should be a decent surrogate, I hope.

If nothing else, the SIX supports OpenGL, and it's even the same driver version as the S3D and S4. So we can try Quake 2 and MDK2. Results don't look flattering to start with, however. Without multitexturing, there's only so much the card can do. At 1024×768, it even falls behind the S3D despite its supposedly higher clocks. The Ultra is looking quite fast at this moment, and considering the Mobility 128 came out not much after the Savage IX, that didn't look like good news for S3. Maybe it was more expensive though. The Savage 2000 is in a league of its own, expectedly.

Exciting times for S3. In MDK2, the SIX manages to actually beat the S3D by a sizeable margin, more than the higher clock would suggest. I'll chalk that up to architecture improvements. But the drop at high resolutions is steep, which further convinces me that the data bus can't be 128-bit. At any rate, the Ultra isn't far ahead here, but the Savage 4 can't even be approached. Check out the bottom… poor Rage LT Pro.

Things get muddier in Direct3D, because as I said before, disabling vsync can show some strange flickering, plus it doesn't even seem to work all that well, as framerate in Incoming is still capped at 60fps. However, the average is higher, and it doesn't appear to be double buffered. I've disabled it for the time being, meanwhile let's cross our fingers.
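For reference, this is roughly what a strictly double-buffered, vsynced renderer would look like in the numbers. The sketch below is a simplified textbook model, not anything taken from the S3 drivers: it assumes every frame has to wait for the next refresh before the buffers can swap, so the sustained framerate snaps to the refresh rate divided by a whole number. The function name `vsynced_fps` is made up for the example.

```python
import math


def vsynced_fps(raw_fps, refresh_hz=60.0):
    """Sustained framerate under strict double-buffered vsync.

    Each frame occupies a whole number of refresh intervals, so the
    result is refresh_hz / n for the smallest n that fits the frame.
    """
    if raw_fps <= 0:
        raise ValueError("raw_fps must be positive")
    intervals = max(1, math.ceil(refresh_hz / raw_fps))
    return refresh_hz / intervals


for raw in (100, 59, 45, 29):
    print(f"unsynced {raw} fps -> vsynced {vsynced_fps(raw):.0f} fps")
# unsynced 100 fps -> vsynced 60 fps
# unsynced 59 fps -> vsynced 30 fps
# unsynced 45 fps -> vsynced 30 fps
# unsynced 29 fps -> vsynced 20 fps
```

Under this model a card hovering just below 60fps would be dragged down to 30. A cap at 60 with a higher average than before fits something looser than that, but an average over a whole run mixes fast and slow frames, so this is a hint and not proof.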

Never mind the Turok results, because T-mark was always an incredibly unreliable benchmark in my experience (a Matrox G200 beating the Geforce 2 GTS, really?). Let's take a look at Incoming instead. The three bottom cards are stuck with vsync, so the results are probably not good for a comparison. Even so, we can take a look at the Ultra and the SIX. At 640×480, there is too much flickering, and I'm afraid it's actually impacting the results. At 800×600 and 1024×768, things are much more bearable. Still, seeing the Ultra beaten by the SIX despite its dual texturing engine is quite the sight, and a far cry from the OpenGL results. In fact, even the Rage Pro can actually compete with the Ultra at 640×480.

Let's switch to 32-bit rendering and things are a bit muddier, especially at higher resolutions where vsync doesn't factor in as much. The SIX actually falls to the bottom of the pack, while the Rage 128 architecture proves itself more efficient than the competition. Either way, even with vsync disabled, I don't think the SIX could have beaten the S3D.

Again, the SIX and Rage LT are vsynced. However, as far as I could tell, the 128 Ultra was not. Despite this, you can see that it doesn't perform very well. Maybe there are some issues with D3D, since in OpenGL the card is comfortably ahead of the old Savage line. Comparisons between the SIX and the Savage cards are impossible due to the refresh rate lock, which is a shame.

Here's also Shadows of the Empire, just for kicks. None of the cards manage to render the fog properly, which is quite an achievement. Even then, the Ultra is clearly punching under its weight. What's going on? Some early bottleneck during D3D rendering?

That's all? No, there's another small surprise. Let's move to a DOS environment and try a few different resolutions in Quake. Mmh, what's this? No matter which resolution you use, the monitor will be set to 640x480 at 60Hz. Even 320×200, which every single other card I own renders as 720×400 at 70Hz, as is proper. In fact, setting a resolution higher than 640×480 will cut off part of the image. I have no idea what could be causing this. Maybe it's yet more laptop heritage? Or maybe it's somehow decided that my monitor is actually an NTSC TV. This happens even on real DOS, without loading any drivers. Whatever the reason, it means the card is no good for DOS games. When I try to play Doom, it's not as smooth as with other cards that run it properly at 70Hz. It's more like playing one of those console ports. Maybe it would be good for Normality, which switched between different resolutions between gameplay and inventory. But I can't think of much else.
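The Doom stutter has a fairly simple explanation, sketched below. One thing here is my own addition and not stated above: Doom's engine is capped at 35 frames per second. With that assumption, the code counts how many screen refreshes each game frame stays on screen for, using a simplified model where a refresh always shows the latest completed frame. The helper names are just for illustration.

```python
def ceil_div(a, b):
    """Integer ceiling division for non-negative a and positive b."""
    return -(-a // b)


def refreshes_per_frame(game_fps, refresh_hz, frames):
    """For each of the first `frames` game frames, count the display
    refreshes that show it (refresh k shows frame floor(k*fps/hz))."""
    counts = []
    for n in range(frames):
        first = ceil_div(n * refresh_hz, game_fps)
        next_first = ceil_div((n + 1) * refresh_hz, game_fps)
        counts.append(next_first - first)
    return counts


# Assumed: Doom renders at most 35 frames per second.
print("70 Hz:", refreshes_per_frame(35, 70, 7))  # [2, 2, 2, 2, 2, 2, 2]
print("60 Hz:", refreshes_per_frame(35, 60, 7))  # [2, 2, 2, 1, 2, 2, 1]
```

At 70Hz every frame is held for exactly two refreshes, so motion is even. At 60Hz some frames are held for two refreshes and some for one, which is the uneven pacing you end up seeing as judder.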

Well, there we have it. Conclusions? This looks like a slightly overclocked Savage 3D, yet there are clearly some architectural improvements. It fixes some of the bugs of the Savage 3D, after all. I'm still very hesitant to call it an off-shoot of the Savage 4, as I've read around. It lacks too many features, it's slow, and there was no point for S3 to drop the texture merging feature of their more successful chip. The lack of S3TC is equally disappointing for a card supposedly aimed at the gamer market, even if we were talking about the budget-conscious ones. I also don't believe the data bus is really 128 bits. On the other hand, even with vsync enabled, the card does look like it would have outperformed the Mobility 128 in D3D, despite its lack of multitexturing. OpenGL shows a different story, however, and the Ati architecture is indeed stronger on paper. It's still weird that the card manages to be slower than the S3D in Quake 2, and drops further at higher resolutions in MDK2. That's hard to explain. Maybe data bandwidth is lower. I should mention that Powerstrip set itself to 60MHz when I tried to check the default clock, but I didn't want to risk enabling it. Besides, the fillrate score from 3DMark 99 doesn't seem to support that idea.

I wish I could have disabled vsync properly, since all other Savage cards let me do it. But I suppose things never go as planned. This was still an interesting card to test, with a few surprises along the way (and some swearing too). I don't think I would have wanted to find one in my laptop though.

Some days ago, my motherboard died. Or so I thought. See, when a PC turns on without beeping or showing anything on the screen, my first thought is "reset the CMOS". If that doesn't work, "try a different power supply". Only if that doesn't work either do I change the mobo. Unfortunately, in my haste, I skipped the second step.

So after changing the motherboard and noticing it still didn't work, I tried the power supply. That did it! Except… the computer was now turning on with the different motherboard. Too late, I didn't think about it. Windows 98 suddenly got messed up, and after a couple of reboots where things seemed fine, it stopped seeing almost every peripheral in the computer. And that was the chance I needed to reset everything.

For a change, I installed Windows 95 again. Nothing against Windows 98, but I do remember it was slightly harder to run DOS games properly on it for some reason, even though both 95 OSR2 and 98 run the same version of MS-DOS.

I even put a Virge in the case during the installation process, to make sure everything would go as smoothly as possible. I don't think there's a more supported card than the Virge out there. And sure enough, the installation was smooth and flawless. I also found a really good USB driver, called XUSBUPP, which was a lot easier to install than Microsoft's own driver. I remember that one would completely ruin your Windows installation if you messed up.

Well, there's just one problem. Ok, a few problems. First one is, the mouse wheel doesn't work – perhaps I can look into fixing it. Another problem is just how rough the thing feels at times – you can't choose which version of the driver to install from an INF file, it seems.

The other problem is 3DMark 99. I thought it would just work the same, but it turns out it won't recognize my Pentium 3 as a Pentium 3. Perhaps that required Windows 98… anyway, I'm stuck with basic Intel optimization, which means my CPU score went down from 7000 to 4500.

(That also shows us that there isn't much difference at all between Pentium 2 and 3, aside from the higher clocks, if you aren't employing some optimization. The P2-350MHz scored roughly 3500, which, scaled by clock speed, perfectly matches the 4500 scored by the P3-450MHz.)
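The clock-scaling claim is easy to check. This little sketch assumes the CPU score scales linearly with clock speed when nothing else changes, which is a simplification, and `scale_by_clock` is just an illustrative name.

```python
def scale_by_clock(score, from_mhz, to_mhz):
    """Predict a score at a new clock, assuming purely linear scaling."""
    return score * to_mhz / from_mhz


# P2-350 scored roughly 3500; what would that be at 450 MHz?
predicted = scale_by_clock(3500, 350, 450)
print(predicted)  # 4500.0
```

The prediction lands right on the 4500 the P3-450 scored without its optimizations, which is what you would expect if the two chips do the same work per clock in this test.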

This would make further card testing partially useless, depending on the card employed. More powerful chips like the TNT2 and Voodoo 3 obtain a fairly lower score. Of course, it would not affect other game tests, since nothing else in my benchmark suite had those P3 optimizations.

Nor, it seems, does it affect less powerful cards in a meaningful manner. The Trident Blade 3D – which I momentarily elected as card of choice due to needing something that could install quickly and painlessly, much like the Virge but less crappy – had its score going down from 2850 to 2750. That sounds like the GPU is bottlenecking the CPU instead. The G250 also went down a measly 50 points, from 3050 to 3000. And weaker cards should be even closer. I do have an interesting 1996 thing coming up, and I'm also waiting to see if my bid on a SiS 6326 gets through. So those should be safe for testing.

The last problem? I forgot to back up my old 3DMark database results. Sigh.

Edit 19/12/16: I eventually managed to get a standard CPU result with the Matrox G100. With that, the score goes all the way up to 560! Amazing (not really)! However, for some reason, the CPU still runs slower at times. Occasionally, restarting the computer fixes it, but not every time. I'm not sure what causes it, but for fairness' sake (and also laziness), I'll leave the old results untouched.

Original: It took a while, but my 3DMark 99 marathon is finally over. I thought I would share the results (for posterity, you know) and also drop in a few comments about each card, seeing as how data doesn't mean much without explanations.

Let me preface this wall of text by mentioning my system specs:

– Pentium III 450mhz

– 128MB SDRAM PC-100

– Windows 98

And the default settings for 3DMark 99 are:

– 800×600 screen resolution

– 16 bits color depth

– 16 bits Z-Buffer

– Triple Buffering

These settings require 3.67MB of video memory (0.92MB for each buffer, including depth), therefore you'll need at least a 4MB card to run the default test. And even then, you won't have a lot of memory left for textures. That's why some of the tests are run in 640×480 and Double Buffering. That way, you only need 1.77MB, and even a 2MB card can make it… however badly.
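The memory figures can be reproduced with some quick arithmetic. The sketch below assumes each buffer is simply width × height × bytes per pixel, with no padding or alignment overhead, and that "MB" means 2^20 bytes. Those are my assumptions, and the function name is illustrative.

```python
def buffer_memory_mib(width, height, bits_per_pixel, color_buffers, depth_buffers=1):
    """Return (MiB per buffer, MiB total) for the given framebuffer setup.

    Assumes the depth buffer uses the same bit depth as the color buffers
    (16 bits each at 3DMark 99's default settings).
    """
    per_buffer = width * height * bits_per_pixel // 8  # bytes
    total = per_buffer * (color_buffers + depth_buffers)
    mib = 2 ** 20
    return per_buffer / mib, total / mib


# Default: 800x600, 16-bit, triple buffering + Z-buffer (4 buffers).
each, total = buffer_memory_mib(800, 600, 16, color_buffers=3)
print(f"{each:.2f} MB each, {total:.2f} MB total")  # 0.92 MB each, 3.66 MB total

# Fallback: 640x480, 16-bit, double buffering + Z-buffer (3 buffers).
each, total = buffer_memory_mib(640, 480, 16, color_buffers=2)
print(f"{each:.2f} MB each, {total:.2f} MB total")  # 0.59 MB each, 1.76 MB total
```

The totals come out about 0.01MB below the figures quoted above, which I'd put down to rounding or a bit of overhead in what 3DMark reports. Either way, it shows how little is left for textures on a 4MB or 2MB card.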

I also realize that my CPU is both overpowered for some of the oldest cards in there, and underpowered for some of the more powerful cards. Ideally, you'll want at least a good 600mhz to take advantage of a Voodoo 3 and G400, not to mention the GF2 GTS which would be best served by a 900mhz at least. I still have a faster P3-700 tucked around. But I didn't want the CPU to influence the results too much on the slower cards' side, so I thought this was best.

But enough of my yapping. Let's have some results.

Well, there are certainly a few weird things here. Even at a glance, you might notice some decidedly unexpected results. Let's take a look at each, starting from the bottom.

S3 Virge/DX 2MB

The good old graphics decelerator, is there any way in which it won't disappoint us? Well, admittedly, the feature set is fairly complete, including mipmaps and trilinear filtering. The lack of score is not due to the horribly low results, but because 3DMark would crash whenever I tried to run the 2nd test (I guess 2MB is really not enough…), forcing me to disable it, thus invalidating the score. But judging from other intermediate results, I'd be surprised if this card went above 150 or so. In truth, I'm amazed that textures are actually displayed correctly in the first game test. Most 4MB cards would simply blank them. But what's the point, when it ends up running at roughly 2fps?

S3 Trio 3D 4MB

I've already mentioned this card before, and now you see the numbers. There are actually two results here, one at default settings and one at 640×480 Double Buffered (like the Virge), just to see if it made a difference. As you can see, it did – in an unexpected manner. Since the card doesn't even bother trying to render most of the tests in 800×600, the score turns out higher. The dithering is some of the worst I've ever seen, too.

Ati 3D Rage IIc 8MB

Yes, this is actually an AGP card. No, I don't think it has anything other than electrical compatibility. It gets some of the worst results alongside the Virge and Trio. Bilinear filtering is ridiculously inefficient (see below) and looks terrible (see above). Mipmapping doesn't work, so of course there's no real trilinear support. At least the on-board 8MB allows it to run textures at their highest resolution. That much memory feels wasted on this kind of card, really.

Matrox G100 Productiva 4MB

What a strange card. It lacks alpha blending, subpixel precision is horrible, as is bilinear filtering. The chip seems derived straight from the Mystique, but overall improved AND gimped. It's faster, textures actually work most of the time, and it supports bilinear and mipmapping, however badly. On the other hand, alpha stippling is perhaps even worse than the Mystique, and overall it's still pretty crappy. Strangely, the card scores higher in multitexturing, but since the Mystique and a few other old cards (such as the Virge) do the same, I'd rather think of it as a bug. After all, even the G200 didn't have it. I should also mention another thing: this is the only card to get a lower score in the CPU test. It doesn't make any sense, because that test doesn't depend on the graphics card. Every other card obtains the same score (roughly 7000), give or take a few points. Why does the G100 only get 5200 points? I really can't say.

Ati Rage LT Pro 8MB

We're finally leaving Horribleville to get into something decent. The LT Pro was still almost a mobile chipset, so scores are overall very low, but the tests all run properly. With one caveat: mipmapping doesn't seem to work. I could expect that from the Rage IIc, but from something off the Pro series? How strange. The card even gets a small boost with multitexturing. Bilinear quality is also a notch above the Rage IIc.

Matrox Mystique 170 4MB

A step to the side. I said we were out of the horrible territory? Sorry, there was still this one last obstacle. This card utterly fails almost every test, rendering a black screen instead. Hence, it gets fast framerates. Hence, it gets a high score. And that's why you shouldn't trust synthetic benchmarks too much. Textures are completely absent in the game tests, so why do we get 4x the score in multitexturing? Who knows. At this point, I'm convinced it's simply a bug.

S3 Savage 3D 8MB

Okay, now it gets better for real. The Savage 3D is quite good, although at the time it was hampered by poor yields and immature drivers. Whichever version you choose, you are bound to have problems. I'm using an engineering release from 1999, which does help in OpenGL games, but also causes issues in other games like Forsaken. 3DMark works though, so there's that. And it aces every quality test too: that's quite something. On the other hand, 3D scores are low across the board, it lacks multitexturing, and texturing speed drops to an utter crawl when the card runs out of local and non-local memory. Really, other cards don't drop as much. I wonder why it's so bad here. But overall, a somewhat usable card for the time, if you didn't mind juggling drivers for every game.

Intel 740 8MB

Ah, Intel's famous failed experiment. Use the on-board memory as framebuffer alone, and rely on system memory for textures! What could possibly go wrong? It at least ended up as the base for their GMA line, so that's not too bad… wait, the GMA line sucked. Oh well. Framerates are bad and fillrate is really low, 48MT/s, comparable to the Rage LT Pro with multitexturing (which the I740 doesn't have), and don't forget that was supposed to be a mobile chipset. But image quality is great. For some reason there's no 32-bit support at all, but given the overall speed, it would have been unusable anyway.

Matrox G200A 8MB

The G200 was the first actually decent card by Matrox. It supported OpenGL for a start, though speed was still the worst, and I saw some bugs too (performance in Quake 2 suddenly drops by about 25-30% after a few repeated tests, and I don't think it's throttling). But at least it's kinda fast. Bilinear is not that great yet, but it's a step above the G100 for sure, and at the time only S3 and 3dfx did better. Fillrate is lower than the Savage 3D, but game speed is faster. Trilinear is somewhat slow though. Not a bad choice overall. The G200A, which I have, is simply a die-shrink which allowed the card to run without a heatsink. But it gets kinda hot, I must admit.

Nvidia TNT 8MB (Vanta?)

The most mysterious among my cards, this one is recognized by both Powerstrip and the system itself (including the BIOS) as a regular TNT. But it's too slow, and its clock is a mere 80mhz. However, it clearly supports multitexturing, and not badly either (almost doubling from 60MT/s to 110MT/s). Overall, I'm not sure what it is. I have a feeling that it might be either a downclocked OEM version, or some kind of Vanta. But did it really need active cooling? Well, image quality is great at least.

Matrox G250 8MB

Not much to say here. It's an overclocked G200A, no more, no less. With frequencies at 105/140mhz, compared to its predecessor's 84/112mhz, scores should be roughly 25% higher across the board – and lo, they are. In spite of the higher clocks, it doesn't have a heatsink. According to Wikipedia, the card itself should be clocked at a maximum of 96/128mhz, but my model defaults higher. That's why I've just edited the page. Hehehe.
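The "roughly 25%" expectation follows directly from the clocks. Here is a tiny sketch of that arithmetic, assuming scores scale linearly with clock speed (a simplification); `clock_gain_percent` is an illustrative name.

```python
def clock_gain_percent(old_mhz, new_mhz):
    """Percentage increase going from old_mhz to new_mhz."""
    return (new_mhz / old_mhz - 1) * 100


# G200A (84/112) vs my G250 (105/140): core and memory clocks.
print(clock_gain_percent(84, 105))   # 25.0
print(clock_gain_percent(112, 140))  # 25.0

# The 96/128 figure from Wikipedia would only give about 14%.
print(round(clock_gain_percent(84, 96), 1))  # 14.3
```

Since the measured scores are about 25% higher, they fit the 105/140 clocks my card defaults to much better than the lower listed maximum.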

Matrox G550 32MB

Wow, that's quite the jump. I wanted to talk about the G400 before this one, since the G550 is essentially a die shrink with an extra TMU per pixel pipeline, and DDR-64 memory instead of SDR-128. Sounds better? Well, the DDR memory actually makes it slower overall, and the extra TMU per pixel pipeline (for a total of 4 TMUs) doesn't help all that much. I can't check the frequencies because Powerstrip doesn't recognize the card, but looking at that single-texturing fillrate, they should be the same as the G400. Multitexturing only gains about 20%. Quality is great, but overall this card seems pretty unstable under Windows 98, at least on the latest drivers, so I wouldn't bother.

Nvidia Geforce 2 GTS 32MB

Holy crap. Why is this one so low? Various test results are ridiculously high, multitexturing fillrate is almost 3 times better than the second highest score (freaking 840MT/s!). So why? Well, it seems that 3DMark 99 places a bit too much emphasis on the game tests. And for some reason, the Geforce 2 doesn't do all that well in those two tests, even though it stomps all over the other ones. Nothing we can do about that, I'm afraid, other than shake our heads in disappointment at MadOnion (Futuremark now). Quality is expectedly great.

3dfx Voodoo 3 3000 16MB

A pretty common card for several reasons: fast, good quality overall, Glide support and non-horrible OpenGL. Still, it has its issues. According to 3DMark (yeah, yeah) its texture memory is limited to 5MB. Probably wrong – it must be checking the maximum available framebuffer size for my monitor (1280×1024) and compensating for it. Admittedly however, I've seen in the past that the card suffers from some strangely blurry textures, and Tonic Trouble doesn't look its best either. The Voodoo 3 is still limited, for multitexturing, to games that specifically support that feature: otherwise your fillrate is cut by half. And yes, single-texturing is slower than the G400 indeed. Quality is great, except for textures, but trilinear filtering is still pretty slow. Anyway, one has to wonder if 3dfx shouldn't have at least featured AGP memory. Too late now.

Ati Rage 128 GL 16MB

I acquired this card just recently, and it was the main reason for attempting this whole endeavor in the first place. It is both good and bad. Ati still stuck with their bad bilinear filtering implementation (in 1999?), but at least performance doesn't suffer as much as the older ones. It's pretty fast overall, but fillrate is not that good, and multitexturing is also pretty inefficient. Not a bad choice, but why use this when there's better stuff around? Still, probably Ati's first truly viable chip.

Nvidia TNT2 M64 32MB

One of the most common graphics cards around, and thus one of the easiest to find on auction sites and garage sales. Quality is good, as expected of something that came out fairly late, and overall it doesn't lack anything, although it doesn't excel in anything either. Quite the "boring" card for sure. Multi-texturing could be more efficient.

Matrox G400 32MB

The current champion, at least according to this test. It has great image quality (including good bilinear from Matrox for the first time), it's fast, contains lots of memory on board and even some extra non-local memory, it's better than the DDR-64 version, and its parallel pixel pipelines with one TMU each meant there was no performance deficit if the game didn't support multi-texturing, as the card simply single-textured at twice the rate. Impressive. Even OpenGL support wasn't all that bad. Of course, the Geforce 2 destroys it in real life scenarios, and the Voodoo 3 is a bit faster in truth. But we're talking about 3DMark here, and the G400 wins. Not to mention, the Geforce 2 doesn't run as well with DOS games. So there you go.

That's a lot of cards. Too bad I don't have a PowerVR anymore, and my Voodoo is broken, and so are my Voodoo 2 and Banshee… damn, old stuff really is fragile. And expensive. Did you know a Voodoo 4 will easily run you over 100 euro? I'm afraid it's true. So I need to aim lower. For my next card, I'd like to get a proper TNT2 Pro, not an M64 this time… and maybe also something older like a Trident or SiS.

While still working on-and-off on my years long project, I came across an S3 Trio 3D. A fairly common graphics card, though not as common as the Virge (which I have too, unluckily for me). Veterans will probably remember the Virge line as the decelerator of its time, and Trio wasn't much better.

General agreement is that the Virge was trying to accomplish too much with too little. PC players at the time were barely playing with perspective correction, and that was already something: PS1 and Saturn didn't even support it. Still, the Trio is more modern. You'd think it could have done better. It didn't.

Well, it didn't, but what a strange failure it was. It's really kind of impressive to see a 4MB, very cheap 2D/3D card properly supporting not just bilinear filtering, but also mipmapping and trilinear. And it does so quite well too, unlike other budget cards of the era. As a matter of fact, if you don't mind playing in 320×240, you'll end up getting decent speed with many more features compared to software rendering. Well, if you can deal with the 15-bit color and the resulting color banding.

Video memory is indeed especially low. And with S3 only assigning a mere 1.5MB to textures, you can forget about great results. But let's face it, the card is way too slow to run anything above 320×240 anyway. I used 640×480 only for testing, but it was clearly not meant for this kind of hi-res.

With 3DMark99 set that high, weird things start to happen. Texturing Speed is especially strange. Don't be fooled by the results up there: the card is only rendering a small part of the test plate. Other cards usually drop to a crawl, but still pass the test. It seems like the Trio doesn't even try to swap, simply omitting the repeated texture instead.

The filtering test also shows something interesting. There isn't nearly enough memory to properly render the graphics, but notice how mipmaps are working, and even trilinear is supported normally. What were they trying to do, supporting this stuff in a low-budget card? You can even see in the results screen that texture filtering comes with some of the heftiest performance drops I've seen this side of the ATI Rage II.

Who knows what they were thinking. But it's quite impressive nonetheless. Games run (or better, don't) like molasses, textures aren't rendered, extra features are way too slow… but filtering itself seems to work fine. Mind, it was 1998, so perhaps not supporting those features would have made S3 a laughing stock in the market. But they pretty much were one already, so why bother?

My tests aren't over, so I'm sure I'll find other interesting results. I've seen a few already (how did the G100 support multitexturing when even the G200 didn't?), and it can only get better. For example, I have that Matrox Mystique waiting…

How reliable is 3DMark 99? I don't really know. Testing a few graphics cards gave me some unexpected results, to say the least.

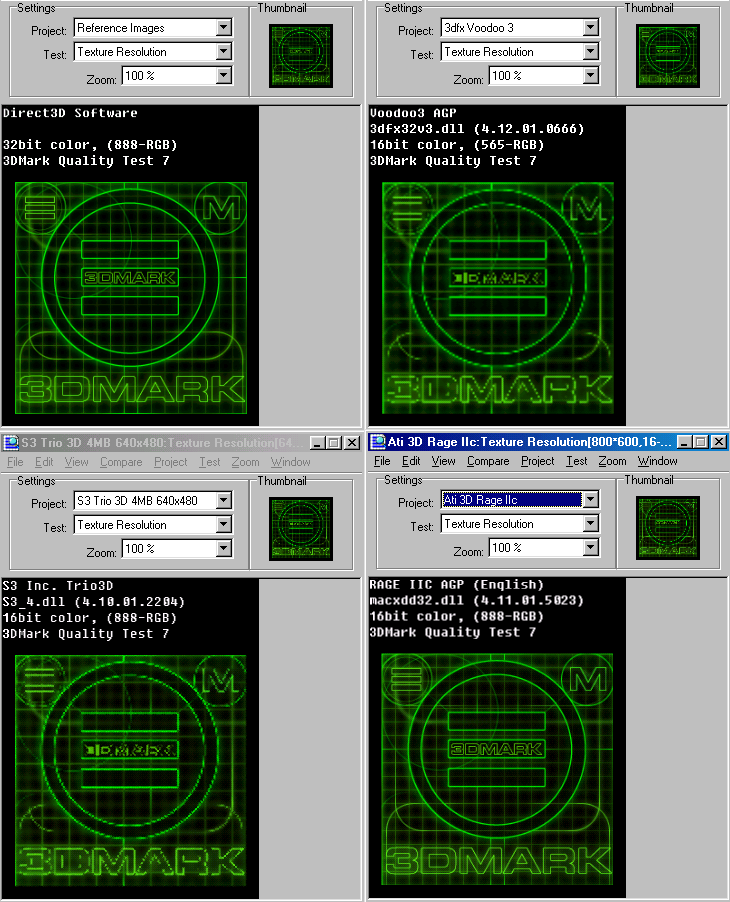

To start with, here are the reference pictures:

So without further ado, let's start. We have already explored the Voodoo 3 last time. Once again, I'm gonna put up the most notable differences, rather than all of them. It saves time, after all.

S3 Savage 3D (8MB)

A relatively obscure graphics card that came out in 1998. Even at the time, its speed was nothing to write home about. Most of the problems were due to bad drivers. My benchmarks put it below the G200, at least when OpenGL is involved. It recovers in Direct3D, but not without rendering issues. At least for this test, however, we'll only be looking at image quality.

Notice the Engineering mark at the top: I was using some experimental 1999 drivers. The original drivers were a lot buggier. But this is a good showing overall. Pretty much all of the other images were the same as the references. For a card cursed with horrible drivers, this is quite impressive. Performance was pretty good as well. If it didn't cause a slightly overblown brightness on my monitor, I might even use it regularly.

ATI Rage LT Pro (8MB)

Pretty much a laptop card, so the low performance is to be expected. Don't be fooled by the best version of Tomb Raider available: the Rage LT Pro is quite slow. It should, however, have all of the modern features of the time.

But something is wrong.

Image quality is overall okay, but something is clearly wrong with filtering. Either bilinear doesn't work as it should, or mipmapping is the one to blame. The synthetic tests seem to point to bilinear, but the game images seem to point to mipmapping as the problem. Of course, it could also be that the issue is 3D Mark itself.

Either way, it's not unheard of for these budget cards to mess up their bilinear implementation.
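For anyone wondering why the two are so hard to tell apart from screenshots, here is a minimal Python sketch of what each step is supposed to do. It is a textbook version, not how the Rage LT Pro (or any real chip) implements it, and the function names and the grayscale list-of-lists texture are my own assumptions for illustration. Bilinear blends the four texels around the sample point, while mipmapping only decides which pre-shrunk copy of the texture gets sampled in the first place.

```python
import math

def sample_nearest(texture, u, v):
    """Point sampling: return the single texel closest to (u, v), in texel units."""
    h, w = len(texture), len(texture[0])
    x = min(max(int(u + 0.5), 0), w - 1)
    y = min(max(int(v + 0.5), 0), h - 1)
    return texture[y][x]

def sample_bilinear(texture, u, v):
    """Bilinear filtering: blend the four texels surrounding (u, v)."""
    h, w = len(texture), len(texture[0])
    u = min(max(u, 0.0), w - 1)
    v = min(max(v, 0.0), h - 1)
    x0, y0 = int(math.floor(u)), int(math.floor(v))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = u - x0, v - y0  # fractional position inside the texel cell
    top = texture[y0][x0] * (1 - fx) + texture[y0][x1] * fx
    bottom = texture[y1][x0] * (1 - fx) + texture[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

def mip_level(texels_per_pixel, num_levels):
    """Pick a mip level: each level halves the texture, so use log2 of the shrink factor."""
    if texels_per_pixel <= 1.0:
        return 0  # texture is magnified, use the full-size level
    level = int(math.floor(math.log2(texels_per_pixel)))
    return min(level, num_levels - 1)

checker = [[0, 255],
           [255, 0]]
print(sample_nearest(checker, 0.2, 0.2))
print(sample_bilinear(checker, 0.5, 0.5))
print(mip_level(4.0, 5))
```

If the blend weights are wrong you get blocky or shimmering texels up close. If the level selection is wrong you get the same kind of mess in the distance, which is roughly why the synthetic tests and the game scenes can seem to blame different things.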

Intel 740 (8MB)

The nightmare of the late '90s. A graphics card that was created to solve the problem of limited texture memory, and that in the end struggled with exactly that: textures. You'd think someone would notice during development. At least it was strong in polygons, its price was very low, and to be fair, image quality was usually pretty good.

Enough. All the other images were essentially gray or black screens anyway. I can't really explain this… previous tests with the Intel 740 were good, and I was using the same drivers as always. Maybe something happened to the chip. I really doubt that's how the card would normally do.

Well, let's move to the last card of the day.

Matrox Productiva G100 (4MB)

This little baby is effectively a Matrox Mystique with bilinear filtering support and more memory, not to mention an AGP connector. This is actually quite interesting, since I'm pretty sure the G100 shouldn't support AGP texturing, but it was able to render up to 16MB textures somehow. And it was faster than the Savage3D in that!

That aside, OpenGL support is still missing, and alpha stippling is the best you get. So don't get your hopes up there. Matrox is always great when it comes to image quality though, so perhaps they can do something here.

I guess there's only so much you can do with limited features.

One thing to point out is that the Rage LT Pro actually showed semi-proper filtering in 3D Mark 2000 (and of course was running horribly slow). So I have my reservations about the reliability of 3D Mark 99. And of course that Intel 740 result is anything but acceptable.

Anyway, outside of the Savage 3D, nothing is really doing well enough. And of course they are real slow as well. No reason to change my Voodoo 3, then.

Just today I read that Futuremark offers all of their older benchmarks for free. Most of these come from the time when the company was still called MadOnion. The logo also looked like an onion, sort of. I liked that. I wonder why they changed it. Anyway, I took the chance to download 3DMark 99 Max, 3DMark 2000, and 3DMark 2001 SE. Anything newer would not be useful to me, as the video cards I could test are probably too weak for even the 2001 version anyway.

I dabbled a bit with 3DMark 99 Max, and while the testing scores are the same as always, one cool feature of the registered version is the ability to compare your graphic card's output to a reference image provided by MadOnion, to show the differences in rendering quality. So here are some results with my Voodoo 3. This is not all of them, I took the most interesting ones.
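As an aside, I don't know how 3DMark 99 Max does its comparison internally; as far as I can tell it just shows the two images and leaves the judging to your eyes. If you save both screenshots, though, a rough numeric version is easy to put together. Below is a minimal Python sketch under my own assumptions: images are plain lists of rows of (r, g, b) tuples, and `diff_stats` is a made-up helper name, not anything from the benchmark.

```python
def diff_stats(reference, rendered):
    """Compare two equally sized images given as rows of (r, g, b) tuples."""
    if len(reference) != len(rendered) or any(
        len(a) != len(b) for a, b in zip(reference, rendered)
    ):
        raise ValueError("images must have the same dimensions")

    total = 0    # sum of per-pixel errors
    worst = 0    # largest single-channel error seen
    changed = 0  # number of pixels that differ at all
    count = 0
    for ref_row, out_row in zip(reference, rendered):
        for ref_px, out_px in zip(ref_row, out_row):
            # Per-pixel error: the worst of the three channel differences.
            err = max(abs(a - b) for a, b in zip(ref_px, out_px))
            total += err
            worst = max(worst, err)
            changed += 1 if err > 0 else 0
            count += 1
    if count == 0:
        raise ValueError("images are empty")
    return {"mean_error": total / count, "max_error": worst, "changed_pixels": changed}

reference = [[(0, 0, 0), (255, 255, 255)]]
rendered = [[(0, 0, 0), (250, 255, 240)]]
print(diff_stats(reference, rendered))
```

A low mean error with a high maximum usually means a few broken spots, while a uniformly moderate error is more likely color depth or filtering.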

Stats are listed on top. You can see the use of decent texture filtering in the reference image, although I can only guess it's a mere 2x AF, which was already impressive at the time. The Voodoo 3 image, on the bottom, is recognizable (other than, ehr, the name on it) by the 16-bit color attribute on top. The image does look more dithered, so perhaps the claims that the Voodoo 3 could do 16-bit with almost the same quality as 32-bit were a bit exaggerated. Notice also the lower filtering quality, and remember the V3 didn't support anisotropic filtering.
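To put a number on why 16-bit output needs dithering at all, here is a minimal Python sketch of plain RGB565 packing. I'm assuming the usual 5-6-5 layout for 16-bit color; this does not model the Voodoo 3's own dithering or its output filter, and the function names are mine.

```python
def to_rgb565(r, g, b):
    """Pack 8-bit-per-channel color into 16 bits by dropping the low bits."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

def from_rgb565(value):
    """Expand a 16-bit RGB565 value back to 8 bits per channel."""
    r5 = (value >> 11) & 0x1F
    g6 = (value >> 5) & 0x3F
    b5 = value & 0x1F
    # Replicate the high bits into the low bits so full white stays 255.
    r = (r5 << 3) | (r5 >> 2)
    g = (g6 << 2) | (g6 >> 4)
    b = (b5 << 3) | (b5 >> 2)
    return r, g, b

# A smooth 256-step gray ramp collapses to far fewer distinct shades.
ramp = [from_rgb565(to_rgb565(v, v, v)) for v in range(256)]
red_shades = len({px[0] for px in ramp})
green_shades = len({px[1] for px in ramp})
print(red_shades, green_shades)
```

With only 32 shades of red and blue and 64 of green, a smooth gradient turns into visible bands, and dithering trades those bands for noise. That noise is the grainy look in the Voodoo 3 shot.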

Now a few more assorted pictures.

The last test is surprising, though perhaps not so much. Obviously a benchmark would be cutting edge for its time, and the Voodoo 3's 16MB of memory were not quite the best anymore in 1999, with several cards sporting 32MB. I have already shown the results of this slight difference in my Tonic Trouble test. But this seems much worse, and I wonder how much of the difference can really be explained by texture memory.

To find the answer, the only choice is to try the remaining benchmarks, and a few other cards. So, many other tests await in the future. At least now I have something to do.


Source: https://b31f.wordpress.com/tag/3dmark/
